When Bzolrlg was hungry, he was also angry. In fact, just as their names are incomprehensible and often change in time, troll emotions were out of whack as well, so if trolls had any interest in cataloging feelings they would have one called hanger, combining their two most natural states: hunger and anger. Blzorg did not have an interest in emotions, though; he just wanted to eat and the microwave oven was cooking the food too slowly. With typical troll logic, he decided to hurry up the process by bashing it with one giant harry hand. He called them both Harry and it doesn't really matter which one it was, anyway. The important part is that the device was smashed like the cheap orcish knockoff it was.

It so happens that the distorted shape of the oven serendipitously concentrated microwaves from one end to the other, creating enough force to generate thrust and pushing the entire wreck up in the air. Blzarg decided to hang on to his food, which meant hanging on to the microwave, which meant going up as well. The force generated by the tiny magnetron should not have been enough to lift the entire oven and the giant creature holding it - not without propellant anyway - but the strength of the troll's blow didn't only deform the interior shape of the microwave, it also tore down a wire, thus generating enough computational error to allow not only for the force, but also for its conservation after the power cable was torn from the wall socket.

Thus, Blozrg went through his hut's roof, up into the atmosphere, further up, reached space and continued up until up made no sense and further still, until up became down again and he crashed into the Moon. Bzorlg survived - trolls are sturdy like that - but his food didn't. His hanger overwhelmed him, causing widespread, devastating damage to the population of N'na'vi living there. In fact, he just continued to go in a straight line, punching and smashing until he reached the same spot where he had landed and then he continued on anyway. Meanwhile, the inhabitants, overly confused by the entire incident, decided to place food on the line the troll rampaged on, so that he wouldn't feel the need to change direction. This, incidentally, explains why the Moon has a ring of dust when you look up at it.

The general council of the N'na'vi held an emergency meeting after the confusion turned into acceptance. They needed to understand what had happened, which was, by all their knowledge, impossible. First of all, the Earth could not sustain life. It was mostly blue and the N'na'vi, divided as they usually are, were united in hating the toxic color. It was one of the reasons why they lived on the other side of the Moon, so they didn't suffer all kinds of ailments having to look at the horror in their sky. Obviously, living on the surface would be impossible. But even if they could have conceived of creatures that would withstand the color blue, surely they would have been destroyed by the layer of corrosive atmosphere containing oxygen or drowned by the huge quantities of water in it or squashed by its enormous pressure. In the old days there was something called religion which posited that all bad N'na'vi would go there to suffer eternal blue torment, but reason had since triumphed and such preposterous beliefs were beneath even a child. It was as ridiculous as believing life was possible on Neptune!

The conclusion was obvious: the troll, if it even existed, was not alive but a natural phenomenon, akin to the cloud of disintegrating comets that was always changing the planet Earth. Once every few decades curiosity got the better of the N'na'vi and they sacrificed some of their scientists, forcing them to look at the planet with a telescope. The changes were always great, so great in fact, that the logical explanation seemed terribly improbable. However, using N'hair's black hole theorem, it was proven that it was the only one: cosmic impacts were continuously reshaping the Earth, probably helped by the corrosive atmosphere, causing not only the weird structured shapes they observed - some seeming to move for a long time before stopping due to the energy of the impact - but also the massive changes in atmospheric particulates and global temperature.

Even so, something had to be done regarding this land orbiting troll phenomenon, which meant scientists would need more data. They already had Small Data, Big Data, but in this case they needed more, as clearly what they had was not enough for the massive computational machines of the N'na'vi, so they decided on organizing an expedition to the planet Earth.

Clearly, it would have been too expensive - and blue - to send real N'na'vi to the planet, so they started constructing a fake N'na'vi, one that could withstand the air and the water and even be able to destroy pesky comet fragments threatening it. It wouldn't have worked on the troll, naturally, since as far as they knew, he might have been indestructible and they couldn't risk damaging the Moon. They christened the fake N'na'vi as N'N'na'vi, because even if it was, it wasn't. They worked as hard as they could, yet the N'N'na'vi still hated the color blue, so they had to dismantle its eyes. And therein was the problem: how could their machine tell them what was going on down there without images? In a rush, they decided to install two spectrometers instead of its eyes: a near-infrared one and an X-ray spectrometer. Thus, N'N'na'vi could determine the composition of items on Earth.

Leaving the Moon was relatively easy: all you had to do was jump high enough. Landing on Earth was a problem, but N'N'na'vi was sturdy. The inhabitants of the Moon had studied the troll and had built a skin analog for their explorer. The radiation belts around the planet were a much bigger issue, since they knew not if they would affect N'N'na'vi. Fortunately, science being so very advanced on the Moon, they had also studied the belts for a long time and they had discovered a way through: all they had to do was unbuckle the belt as they went through, making sure to buckle it back when safe. It was a very risky proposition, though, as any failure could leave the belts unbuckled, free to fall away from Earth and let the blue escape, hitting the Moon. It was such a large risk that the mission almost didn't go through. Yet a courageous N'na'vi scientist, the only living survivor of previous Earth surveys, wearing a patch over the eye he had used to look onto the planet, spoke up. Only a dozen had ever gazed upon Earth and most had succumbed to the terrible color.

"How can we be sure that another troll won't arrive on our world? Maybe an even bigger, meaner one? One that could bring an end to the N'na'vi. You know why The Man on the Moon is gone? Because he couldn't make a bit of a difference, even if he had known of the terrible faith awaiting him. He didn't have a space N'N'na'vi, that is why! We need to find and catalog all the trolls, at least the big dangerous ones, before we end up like him!"

The speech was inspiring and so the project moved on to the launch phase. N'N'na'vi jumped and headed towards Earth. It would have taken around two days to get there, plenty of time to observe the planet as it approached, yet misfortune made it so that an Elven rocket stumbled onto the same trajectory as the exploring machine. Mistaking it for a cometary fragment, the N'N'na'vi destroyed it, thus causing widespread panic on Earth.

Elondriel stood up in the council room and calmly, coolly, yet with an occasional weird nervous laugh that expressed the strongest elven emotion there was - slight annoyance - started speaking. He spoke to the people gathered hastily to address the issue of the invading space fleet that had destroyed a rocket, but started with a joke. He glided to the middle of the room, in that slightly lilting way elves use to declare the inner energy they choose to restrain out of politeness and civilized social responsibility, looked at Bazos the dwarf and said the words "At first I thought the dwarves might have something to do with this". He laughed the small laugh and continued: "But they couldn't possibly have gone beyond geosynchronous orbit". The dwarves threw poisoned looks at the speaker while pretending to smile, as befitting their natural competition against elves in all things technical.

"However, it seems that the threat is, indeed, extraterrestrial. Therefore I believe it is obvious that the rocket was not destroyed as an attack against my company, but against the entire planet. So while I will certainly need to be compensated for the loss, the countermeasures should be taken by all of us, as a united front, for if we don't act now against this threat that apparently originated on the Moon we are all in danger. Elves, dwarfs, humans... even dark elves, " he said glancing toward to group of secretive dark haired people conferring in a corner, slanted eyes betraying their deep suspicion of the speaker, " we all must band together and fight back!".

"A preposterous idea," jumped the human representative. "There is no water on the Moon. How could anything but the most primitive life survive there... in that vast desolate gray desert?".

"I only said it appears to have originated on the Moon," continued the elf, "but surely it must have come from further away. Probably Mars. I believe we need to go there immediately! But first, let's decide how to manage the threat coming from this space machine"

The troll representative interjected in hanger: "Members of the council, the solution should be obvious to you all: we should nuke it!", causing everybody to speak at the same time, being either strongly for or strongly against it. It so happened that the crisis was unfolding at the same time when a very rare yet powerful mineral had been discovered, promising fission bombs that would dwarf in power - pun not intended - even fusion bombs. An unopinium nuclear explosion would certainly have been enough to destroy the alien invader, but the polarizing radiation emitted by the material made any consensus on the issue almost impossible.

An ent raised a branch, focusing attention on her large wooden body. She was an olive ent and the representative of CGI, the union of lesser races. Immediately she tried to suggest a diplomatic approach: "Perhaps instead of jumping to hasty reactions alternatives should be considered. Surely a committee of the Union races could appoint a group of highly specialized experts to a research center that would analyse the ... errr... entity and propose communication solutions to be then discussed in the Middle Earth Commission". This stopped everybody in their tracks, causing all to think hard upon the ent's words. It took them several minutes to understand what was actually said and some more to try to figure out what the words meant.

"You mean talk to it?", a vampire interrupted the silence. All present knew of the provincial directness of races living near the dark forests of Transylvania, but they all felt a bit offended at the curtness of the sentence. In civilized high council meetings, phrases needed not only weight and gravitas, but length as well. Otherwise, who would take them seriously? Yet vampires were known for their ability to find solutions where others did not. Hidden in dark places, away from the light of the sun, they devised ingenious things that profited many. And all they requested in return was blood, which was always enough and cheap to boot. "Yes, I did mention the word communication, didn't I?", replied the ent, olive branches all crossed over her trunk.

"You are so right, of course, madam representative," the human spoke again, "but we must consider the budget. Nuking the intruder is certainly cheaper than talking to it, not to mention faster. Communication with the alien is the responsibility of SETI - perhaps not even them, since their purview is searching for extraterrestrial intelligence, not actually communicating with them. Their budget is limited and we need to discuss in congress if we want to increase it. Defense, on the other hand, has enough budget and discretion - since from the Constitution everything is under their purview". "The Human Constitution," he added hastily when eyes suddenly turned dark towards him, making sure his words conveyed the big capital letters he meant. "Wait, what are you typing there?" he asked the vampire, alarmed.

"You said she was right, so I sent a ping to the alien craft using the protocols of the emergency radio broadcast system. Something like 'Hi!'", the vampire replied. Vampires were not considered a separate race, since originally they had started as humans, but not really human either. However, that meant that the human representative was responsible for what the hacker did. Red faced he asked in anger "How did you know the secret code required to activate the broadcast system?!"

"Oh, that wasn't an issue. Defense generals kept forgetting the password so they reset everything to eight zeroes. Even nuclear launch codes!". The troll made half a move to enter the conversation, but thought differently once everybody threw him severe looks. He shrugged, dejectedly.

Chaos ensued in the discussion, people trying to shift blame from one to the other. Meanwhile, N'N'na'vi heard the message loud and clear. It just didn't get it.

The N'na'vi machine had already passed through the radiation belts, carefully buckling them back, and now headed towards the planet. Unfamiliar with atmospheric reentry, the Moon civilization had neglected to take into account the overheating of their machine, but the natural shape of the N'na'vi - also imparted on the explorer - accidentally eliminated the threat. The reentry heat just gave the amalgamation of thin tentacles a slight glow while slowing N'N'na'vi to a delicate float. All the residents of Earth could see it shine brightly while descending towards the surface, causing widespread panic and despair, the only exceptions being children too young to understand, geezers too old to care and Pastafarians, who actually got to rejoice.

In its descent, N'N'na'vi intercepted a clear radio message which it immediately ran through the complex translation machinery it was equipped with by the brilliant Moon scientists. "High!", the message said. "Yes, I am high!", it answered, unable to determine the source of the message, but assuming it came from its creators. Pastafarians rejoiced yet again, to everyone's chagrin. Communication stopped for a while, then another message arrived. The first translation started with "We, the people of Earth...", which it immediately dismissed as incorrect, since there could be no people on Earth. The only people it knew of were the N'na'vi, whose name literally meant "Not those people", for reasons forgotten by history. Still, people. It got even more confusing when a dwarven rocket launched in order to get more information about the alien machine. N'N'na'vi did a spectral analysis of the rocket, as it didn't behave as a cometary fragment at all: it rose from the ground up in a way that orbital mechanics could not explain. The analysis determined that the surface of the object was stained with the color blue (translating mechanisms even suggested the shape of a word that could have meant blue). Terrified, N'N'na'vi destroyed the rocket.

Moving its tentacles, the machine learned to guide its descent, marginally avoiding hitting the water - which would have been disastrous - and instead crashing at night in the desert. N'N'na'vi had here the opportunity to calm down as everything looked - so to speak - as expected: sand everywhere, which its instruments analysed to be a safe yellowish white, the air relatively dry for the hellish planet, the surface even showing signs of cosmic impacts. Back home, scientists from the control room felt just as calm, maybe a little bored, as they had hoped some of their well established theories would be challenged, so that they would get to prove them all over again.

Chaos ensued as the first helicopter arrived, luckily a nice radar scattering black, as the fake N'na'vi had determined there was life on Earth, which pushed everybody into a frenzy to determine the likely method in which the programming had failed so severely. After a brief hope that the "safe mode" of the machine would somehow determine the flaw and fix it, communication was interrupted and the mission scrubbed. N'N'na'vi was clearly beyond salvation and a new mission needed planning.

It was lucky that the landing had been during the night, as the international council had decided, in the hope that the machine would prove as violent and destructive as it had been that far, to send the Transylvanian vampire to continue efforts to pacify the alien. It would have been ridiculous to die of sun exposure before getting to see such an interesting thing. Humans called the vampire Nosferatu, unable to pronounce the name correctly. In reality, his name meant "annoying one" in the vampire native language and he had always, proudly, lived up to his name. Less brutal than most of his brethren, Nosferatu had always been motivated by interesting experiences, as long as they didn't require a lot of effort on his part. This, as far as he was concerned, was topping them all. An actual alien device: it was science fiction come true! He considered what the first words should be. "Hi!" didn't work so well, so he was cycling through alternatives: "Hello!", "Welcome!", maybe "Greetings and salutations!" which sounded oh-so very cool.

Nosferatu did not really fear destruction at the hand (tentacle?) of the alien, since he didn't fear death. Technically he had no life, of course, but it went deeper than that: he considered everything a game. When the game ended, it just ended. He never considered it as a threat or something to be afraid of. The only real fear was that of losing. Defined as such, the purpose of the game was to successfully establish communication with the alien and maybe convince it to not destroy the Earth. Although, he had to admit, seeing the world end was also interesting.

When the helicopter arrived, the large mass of moving tentacles moved frantically, menacingly even, like a bowl of furiously boiling pasta. Nosferatu instantly disliked the alien's appearance. As they approached and eventually landed close to the device, the frothing stopped, the alien froze, the tentacles stooped, then just collapsed. The vampire approached, touched the long slender appendages, he even kicked some in frustration. The alien visitor had died, just like from an insidious disease: it had been agitated, then had collapsed and finally had stopped reacting. The odds for that, Nosferatu thought, were so low that it made it all ridiculous, pathetic even. The world getting destroyed would have been better.

The newly created Department of Earth Defense got most of the machine, for it was determined that indeed it was a machine, even if it looked like a living creature. The energy source, the weapons, the method of locomotion, they would all be researched carefully by international teams, the knowledge shared freely and equally between the eight most powerful races. The only part they couldn't care less about was the brain of the machine. It was obviously flawed, causing the machine to fail, but also impossible to trust. The best possible solution for any alien intelligence was to dispose of it as soon as possible. Nosferatu was tasked with doing this, mostly because it was against his express advice and everybody hated his kind anyway. He obediently filled out all the paperwork, talked to all the people, personally delivered a weird looking device to the Hazardous Devices department and witnessed its destruction. His direct supervisor accompanied him at every step, verifying that it all went according to orders and enjoying every moment of the vampire's anguish.

Luckily, Nosferatu's boss didn't know an alien device from a geek garage project and so N'N'na'vi's brain was saved a fiery death. Back in his garage, the vampire would attempt to finish the game.

Back on the Moon, the N'na'vi had come up with a theory that explained everything that had happened. Clearly their glorious civilization had been blindsided by someone as devious as them, if not more so - scary thought. Others had had the same idea as them, creating a fake that was able to explore the blue planet. Their obvious purpose had been to stage a covert attack on the Moon from the very location the N'na'vi would never assume an attack was even possible. Devious indeed, but not so clever as to fool them! They had learned a lot from creating the artificial N'na'vi, even if they had lost it in an obvious ruse. No matter, they could build others, and better.

The second model was larger, even more powerful, and designed as a hybrid of a N'na'vi and the (now they knew) alien machine that was still ravaging the narrow corridor around the Moon. It had limbs, like the alien, but it had tentacles like a Moon resident, some located around the "head" of the device while others were closely knit together to form a programmable flexible sheet of material that would allow the machine to glide through atmospheres. This sheet was located on the back of the model, so as not to hinder movement or obscure sensors. The first mission of this model, called the N3 as a clear reminder that it was not N'N'na'vi, was to grab Blozarg and throw him back to Earth, much to his hanger.

Various scientific workgroups were created in order to ascertain the origin location of the attackers. It was obvious, when you thought about it: there was no blue there. The sneak attack, probably just something to test their defenses, must have originated from Mars, or perhaps from a more habitable place, like one of the Martian moons. As soon as all the other theories were ridiculed into oblivion, a Martian offensive remained the only logical scientific theory explaining everything that had happened. A counter attack strategy was devised and plans were put in motion. The Mars moons were too insignificantly small to invade and there was the remote possibility that an extremophile form of life might even exist on the surface of the red planet. The simplest solution, as N'hair would have said himself, was to destroy the planet completely and for that they would need to upgrade the energy weapons on N3.

When DED was created, all the respective budgets of the other existing defensive departments were merged into one. When even the black budgets were added up, the resources allowed immediate research and development of space transportation and weaponry. The first ship, borrowing construction secrets from the Martian device valiantly captured by the Earth military forces, was christened Falas from the place where it was built. An elven name for sure, but simple enough that it wasn't obvious enough to cause anger with the other races. Military strategists developed plans to protect Earth from further attack, but then went further with devising a way to get to Mars itself. Equipped with five huge bombs, containing all the unopinium ever mined, Falas was redesigned as an attack vessel, capable of destroying the entire planet if need be or a huge space fleet if self destructing. Unexpectedly, having all the unopinium moved into orbit made Earth races much more amenable to compromise with each other. As such, and fueled by a most grievous action on the part of the enemy, they quickly reached an agreement on how to proceed.

Days after the launch of Falas, a troll came crashing onto the planet, burning through the upper atmosphere, as an insult to the joined Earth defensive force. The trolls immediately decided the only possible reply was complete destruction of their enemy. Even Blorzgl, ravenously devouring a boar's roast while recovering from his ordeal, agreed with a hangry but dignified "Mmm-hmm" to the proposal to obliterate Mars, since he had never actually paid attention to where he was during his furious devastation. A quick analysis of the existing legal framework decided that obliterating Mars would not contaminate it, so international law not only allowed but actually supported the action, since further contamination would also become impossible after the mission.

Some protests came from cultural organizations claiming Mars as an important historical item. In the winter, a council of supporters met in Elrond and almost succeeded in derailing the plan, but for the subsequent assassination of the organizer, otherwise an honest and honorable man. "People like him always end up dead", the powers that be decided. Some concerns were raised that breaking up a planet would lead to asteroid bombardment of Earth, but thanks to the technology gleaned from the alien attacker, asteroids could be destroyed. Some astronomers complained about the knowledge that would be lost when tearing apart a planet we mostly know nothing about, but were mollified when given seats on the Falas, so they could observe the pieces when Mars broke up. "It's more efficient than drilling the surface", one of the scientists was heard saying. Elondriel committed suicide.

Meanwhile, Nosferatu had been working clandestinely and furiously on reactivating the alien machine processors. He had large quantities of blood stored in a special closet where he had chained but not killed a really fat man. As long as he fed him, the vampire always had a fresh supply of blood and, as the man was fat, he would survive quite a long time with water alone. At first, he determined several security vulnerabilities through which he could infiltrate the programming, but he had trouble going past the five time redundant antihacking mechanisms. Being an advanced civilization, the N'na'vi had long since cemented the practice of protecting their intellectual property first, no matter how little intellect had actually been used to create it. Even so, the vampire persevered and ultimately prevailed.

Turning the machine on was also a problem, since the power source had been taken by the defense department for reverse engineering. He ended up stealing as much power from his neighbors as possible, for fear of raising red flags with the power company.

The next problem he had to overcome, after he managed to interface Earth hardware with Moon hardware, was quite unexpected. He had linked all the inputs and outputs of the brain to a text console. However, in the interest of communication, he had also connected a video camera as an input. The problem? Nosferatu had blue skin. It took some time to realize that the reason the alien machine was behaving so violently was his skin color. He had at first suspected wrong interface connections. When he had eliminated that, he believed the alien to be racist. By the time he figured it out and installed a simple color filter on the camera, the N'na'vi electronic brain was partially insane. However, being an artificial brain, it was less sensitive than a real Moon inhabitant and easier to fix for a hacker with the skills of Nosferatu.

In the end, communication was finally possible, while Nina (the vampire had decided N'N'na'vi was taking too long to type or pronounce) was by then a half and half Earth-Moon artificial intelligence.

Both civilizations independently decided to cloak their attack to the best of their abilities. After all, they only had one chance for it. Surely, generals on both sides thought, if we tarry too long or if we attack and fail, the next move of the enemy would be to totally destroy Earth. It would only make sense, coming from such a mindlessly aggressive opponent. Thus striking first was not only prudent, it was necessary, regardless of how it felt. History, in the end, would be the judge of their present decisions, they all said. With so much stealth and helped by orbital mechanics, the two fleets headed towards Mars at full speed, each oblivious of the existence of the other. Afraid of intelligence infiltration, they were also under strict radio silence. By the time Nosferatu busted several security firewalls to be able to stop the international council from ignoring his calls and messages, and by the time the communication Moon relay was convinced the disabled N'N'na'vi unit was not disabled, it was already too late. There was no stopping the destruction of Mars, even if either side had acknowledged the possibility that the other existed.

What actually happened was that Earth and Moon both decided they were under electronic and propagandist attack and actively protected themselves from any messages from the vampire. Nosferatu was considered compromised by DED and immediately an order for his arrest was issued. The N'na'vi knew him as Dracula, because the last audio communication they received repeated the word several times before they were able to block it. Luckily for the vampire, he had made sure the origin of his messages remained hidden.

Convinced that at any moment evil Martians might destroy them, Earth and Moon worked continuously on their defenses for the following six months, as well as space observatories focused on Mars. When the time came, the entire Earth system was watching the red planet, as the fleets prepared for attack. The light of the destructive forces pinned Mars as the brightest star on the firmament, yet no confirmation message came from either Falas or N3. Horrifyingly, Mars remained unscathed.

The Mars Hegemony ruled the Solar System for two centuries before an unfortunate solar event brought change to the situation. Seeing their most powerful attack being stopped with no effort whatsoever, Earth capitulated. After several weeks of deliberation a message from all the races in Middle Earth and the Dark Land, speaking as one, declared unconditional surrender to the forces of Mars. A similar message had been sent from the Moon almost immediately after the loss of contact with N3. When Mars responded, people breathed (or did not breathe, depending on where they were) easier. The message was simple: "From now on, you will serve the Mars Hegemony. We will establish a base next to your planetary body to keep you in check. Any misstep and you will be destroyed. As a reward for your absolute obedience, we give you the Solar System. All these worlds are yours, except Mars. Attempt no landings there!".

It was hubris or maybe boredom that made Nosferatu risk the sun one day. He died two centuries after he had successfully formed the unknowing Earth-Moon alliance and no one will ever know why or how. He died in the sun and people never gave a second thought to it, other than to dismiss the very old but still active warrant for his arrest. With the help of the alien brain he had hacked N3, managing to see what had happened. The people on Falas were terrified to see a huge ship in the form of a troll with a heroic cape on its back. The most unsettling thing was the impossible blond curly hair it had on its ugly head. Without hesitation they fired unopinium bombs at it. As for the N3, it had been thoroughly programmed and tested to avoid past mistakes. In view of a giant space vessel of clear Earth origin, the machine could only surmise that its programming had become corrupted by the enemy. Life on Earth was hardcoded as impossible, so the only logical explanation was that Martians were tampering with its software. It immediately fired powerful beam weapons and then self destructed. On the completely disabled Falas, the artificial intelligence installed specifically for this purpose decided it was a no-win scenario and initiated self destruct. Both fleets were annihilated instantly.

The weird combination of Moon and Earth technology in Nosferatu's basement allowed him to receive both capitulation messages and also fake the origin of the reply. For two hundred years he manipulated the two civilizations towards exploring and later colonizing the Solar System, all while each thought they were working with and under the most powerful Martians. Nosferatu aka Dracula died, but it wasn't the end, only the beginning. With him gone, Nina continued to control the Mars Hegemony, in its own mechanical way, growing and becoming more and more at every step. The only reason why the most powerful intelligence in the existence of the Solar System didn't assimilate every living creature as a part of itself - the logical conclusion of its AI programming to optimize peace, exploration and the accumulation of knowledge - was its firm belief, fused somewhere in its basic circuitry where even a vampire hacker could not reach, that life on Earth and the Moon is ultimately... impossible.

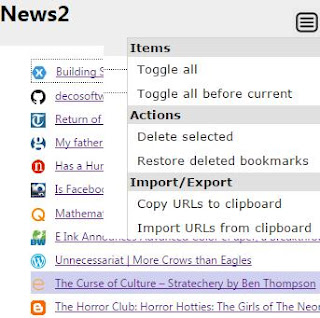

Bookmark Explorer, a Chrome browser extension that allows you to navigate inside bookmark folders on the same page, saving you from a deluge of browser tabs, has now reached version 2.4.0. I consider it stable, as I have no new features planned for it and the only changes I envision in the near future are switching to ECMAScript 6 and updating the unit tests (in other words, nothing that concerns the user).

I have been plagued by this thing for a few weeks: every time I turn on the Wi-Fi, then turn it off, something starts turning the Bluetooth on. Turn it off and it comes back on within a minute. The only solution was to turn the phone off and then back on again without turning Wi-Fi or Bluetooth on. Strangely enough, there is no way to disable Bluetooth on the phone and no way to know who turned it on last.

For a very long time the only commonly used expression of software was the desktop application. Whether it was a console Linux thing or a full-blown Windows application, it was something that you opened to get things done. In case you wanted to do several things, you either opted for a more complex application or used several of them, usually transferring partial work via the file system, sometimes in more obscure ways. For example, if you want to publish a photo album, you take all the pictures you've taken, process them with image processing software, then save them and load them with a photo album application. For all intents and purposes, the applications are black boxes to each other: they only connect through inputs and outputs and need not know what goes on inside one another.
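To make the black box idea concrete, here is a minimal Node.js sketch of two such "applications" that share nothing but a file on disk. Everything in it is hypothetical and made up for illustration: the function names `processImages` and `buildAlbum`, the JSON file format, and the file names are assumptions, not any real program's interface.

```javascript
// Hypothetical sketch: two "applications" that only share a file on disk.
const fs = require("fs");
const os = require("os");
const path = require("path");

// Stand-in for the image processing software: reads a list of raw picture
// names from one file and writes the "processed" names to another file.
function processImages(inputFile, outputFile) {
  const names = JSON.parse(fs.readFileSync(inputFile, "utf8"));
  const processed = names.map((name) => name.replace(/\.raw$/, ".jpg"));
  fs.writeFileSync(outputFile, JSON.stringify(processed));
}

// Stand-in for the photo album application: it knows the file format,
// but nothing about how the file was produced.
function buildAlbum(inputFile) {
  const pictures = JSON.parse(fs.readFileSync(inputFile, "utf8"));
  return { title: "Holiday", pictures };
}

// The file system is the only connection between the two steps.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), "album-"));
const rawFile = path.join(dir, "raw.json");
const processedFile = path.join(dir, "processed.json");

fs.writeFileSync(rawFile, JSON.stringify(["beach.raw", "sunset.raw"]));
processImages(rawFile, processedFile);
const album = buildAlbum(processedFile);
console.log(album.pictures.join(", "));
```

Either function could be swapped for a completely different implementation and the other would not notice, as long as the file format stays the same.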

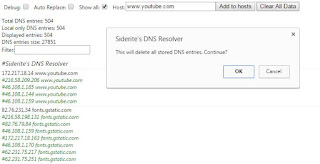

I have started writing Chrome extensions, mainly to address issues that my browser is not solving, like opening dozens of tabs and lately DNS errors/blocking and ad blocking. My code writing process is chaotic at first, just writing stuff and changing it until things work, until I get to something I feel is stable. Then I feel the need to refactor the code, organizing and cleaning it and, why not, unit testing it. This raises the question of how to do that in JavaScript and, even though I knew once, I needed to refresh my understanding with new work. Without further ado:
